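SenseVoice: a speech recognition model. It recognizes speech in multiple languages, identifies the speaker's emotion, and detects special events in the audio (such as music or laughter). It transcribes speech quickly and accurately.

CosyVoice: a speech generation model. It mainly generates natural, emotionally expressive speech. It can imitate different speakers and can even clone a person's voice from an audio sample only a few seconds long.

SenseVoice focuses on multilingual speech recognition, emotion recognition and audio event detection, offering high-accuracy, low-latency speech processing. CosyVoice focuses on natural speech generation and control: it supports generation in multiple languages, timbres and speaking styles, and provides zero-shot voice generation and fine-grained control over the speech. Together, the two models let FunAudioLLM deliver high-quality voice interaction across a range of applications.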

Installation

Clone and install

- Clone the repository

git clone --recursive https://github.com/FunAudioLLM/CosyVoice.git

# If cloning the submodules fails because of network problems, rerun the following commands until they succeed

cd CosyVoice

git submodule update --init --recursive

- Install Conda: see https://docs.conda.io/en/latest/miniconda.html

- Create the Conda environment:

conda create -n cosyvoice -y python=3.10

conda activate cosyvoice

# pynini is required by WeTextProcessing; install it with conda, since the conda package works on all platforms.

conda install -y -c conda-forge pynini==2.1.5

pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/ --trusted-host=mirrors.aliyun.com

# If you encounter sox compatibility issues

# ubuntu

sudo apt-get install sox libsox-dev

# centos

sudo yum install sox sox-devel

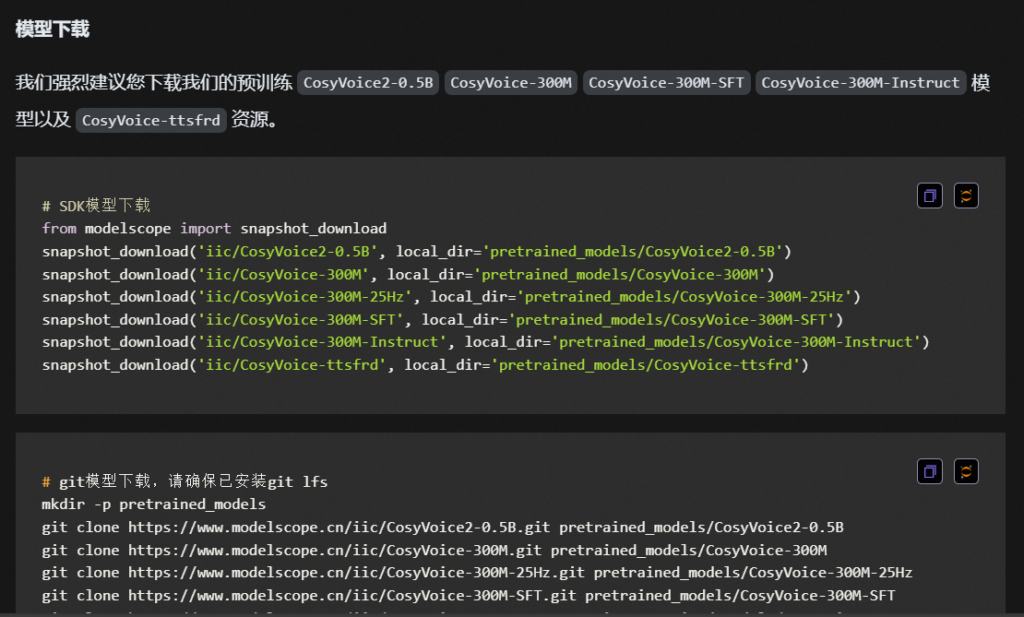

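Model download

We strongly recommend downloading our pretrained CosyVoice2-0.5B, CosyVoice-300M, CosyVoice-300M-SFT and CosyVoice-300M-Instruct models, together with the CosyVoice-ttsfrd resource.

# Download the models with the SDK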

from modelscope import snapshot_download

snapshot_download('iic/CosyVoice2-0.5B', local_dir='pretrained_models/CosyVoice2-0.5B')

snapshot_download('iic/CosyVoice-300M', local_dir='pretrained_models/CosyVoice-300M')

snapshot_download('iic/CosyVoice-300M-25Hz', local_dir='pretrained_models/CosyVoice-300M-25Hz')

snapshot_download('iic/CosyVoice-300M-SFT', local_dir='pretrained_models/CosyVoice-300M-SFT')

snapshot_download('iic/CosyVoice-300M-Instruct', local_dir='pretrained_models/CosyVoice-300M-Instruct')

snapshot_download('iic/CosyVoice-ttsfrd', local_dir='pretrained_models/CosyVoice-ttsfrd')

# Download the models with git; make sure git lfs is installed

mkdir -p pretrained_models

git clone https://www.modelscope.cn/iic/CosyVoice2-0.5B.git pretrained_models/CosyVoice2-0.5B

git clone https://www.modelscope.cn/iic/CosyVoice-300M.git pretrained_models/CosyVoice-300M

git clone https://www.modelscope.cn/iic/CosyVoice-300M-25Hz.git pretrained_models/CosyVoice-300M-25Hz

git clone https://www.modelscope.cn/iic/CosyVoice-300M-SFT.git pretrained_models/CosyVoice-300M-SFT

git clone https://www.modelscope.cn/iic/CosyVoice-300M-Instruct.git pretrained_models/CosyVoice-300M-Instruct

git clone https://www.modelscope.cn/iic/CosyVoice-ttsfrd.git pretrained_models/CosyVoice-ttsfrd
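If only some of the models are needed, or a download was interrupted, it can help to see which ones are still absent before fetching anything. The following is a minimal sketch and not part of the official tooling: missing_models is a hypothetical helper, and it treats a model as present whenever its directory under pretrained_models exists and is non-empty, which is a rough heuristic that cannot detect a partial download.

```python
from pathlib import Path

# Model IDs copied from the download commands above.
MODEL_IDS = [
    "iic/CosyVoice2-0.5B",
    "iic/CosyVoice-300M",
    "iic/CosyVoice-300M-25Hz",
    "iic/CosyVoice-300M-SFT",
    "iic/CosyVoice-300M-Instruct",
    "iic/CosyVoice-ttsfrd",
]


def missing_models(model_ids, root="pretrained_models"):
    """Return the model IDs whose local directory is absent or empty."""
    missing = []
    for model_id in model_ids:
        # 'iic/CosyVoice2-0.5B' is stored in '<root>/CosyVoice2-0.5B'.
        local_dir = Path(root) / model_id.split("/")[-1]
        if not local_dir.is_dir() or not any(local_dir.iterdir()):
            missing.append(model_id)
    return missing


for model_id in missing_models(MODEL_IDS):
    print("still to download:", model_id)
```

Each ID that is printed can then be passed to snapshot_download with the matching local_dir, exactly as in the SDK commands above.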

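Optionally, you can unzip the ttsfrd resource and install the ttsfrd package for better text normalization.

Note that this step is not required. If ttsfrd is not installed, WeTextProcessing is used by default.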

cd pretrained_models/CosyVoice-ttsfrd/

unzip resource.zip -d .

pip install ttsfrd_dependency-0.1-py3-none-any.whl

pip install ttsfrd-0.4.2-cp310-cp310-linux_x86_64.whl
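To see which text normalization backend an environment would end up with, a quick check like the one below may be useful. It is only a sketch: pick_text_frontend is a hypothetical helper, and it assumes the wheel installed above is importable under the module name ttsfrd. It does not reproduce how CosyVoice itself selects the frontend; it only mirrors the fallback rule described above.

```python
import importlib.util


def pick_text_frontend(find_spec=importlib.util.find_spec):
    """Return 'ttsfrd' if that package can be imported, else 'WeTextProcessing'.

    find_spec is injectable so the decision can be tested without
    installing anything. The module name 'ttsfrd' is an assumption.
    """
    if find_spec("ttsfrd") is not None:
        return "ttsfrd"
    return "WeTextProcessing"


print("text normalization backend:", pick_text_frontend())
```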

Basic usage

For better performance, we strongly recommend CosyVoice2-0.5B. See the code below for the detailed usage of each model.

import sys

sys.path.append('third_party/Matcha-TTS')

from cosyvoice.cli.cosyvoice import CosyVoice, CosyVoice2

from cosyvoice.utils.file_utils import load_wav

import torchaudio

CosyVoice2 usage

cosyvoice = CosyVoice2('pretrained_models/CosyVoice2-0.5B', load_jit=False, load_trt=False, fp16=False)

# NOTE if you want to reproduce the results on https://funaudiollm.github.io/cosyvoice2, please add text_frontend=False during inference

# zero_shot usage

prompt_speech_16k = load_wav('./asset/zero_shot_prompt.wav', 16000)

for i, j in enumerate(cosyvoice.inference_zero_shot('收到好友从远方寄来的生日礼物,那份意外的惊喜与深深的祝福让我心中充满了甜蜜的快乐,笑容如花儿般绽放。', '希望你以后能够做的比我还好呦。', prompt_speech_16k, stream=False)):
    torchaudio.save('zero_shot_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

# fine grained control, for supported control, check cosyvoice/tokenizer/tokenizer.py#L248

for i, j in enumerate(cosyvoice.inference_cross_lingual('在他讲述那个荒诞故事的过程中,他突然[laughter]停下来,因为他自己也被逗笑了[laughter]。', prompt_speech_16k, stream=False)):
    torchaudio.save('fine_grained_control_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

# instruct usage

for i, j in enumerate(cosyvoice.inference_instruct2('收到好友从远方寄来的生日礼物,那份意外的惊喜与深深的祝福让我心中充满了甜蜜的快乐,笑容如花儿般绽放。', '用四川话说这句话', prompt_speech_16k, stream=False)):
    torchaudio.save('instruct_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

# bistream usage, you can use generator as input, this is useful when using text llm model as input

# NOTE you should still have some basic sentence split logic because llm can not handle arbitrary sentence length

def text_generator():
    yield '收到好友从远方寄来的生日礼物,'
    yield '那份意外的惊喜与深深的祝福'
    yield '让我心中充满了甜蜜的快乐,'
    yield '笑容如花儿般绽放。'

for i, j in enumerate(cosyvoice.inference_zero_shot(text_generator(), '希望你以后能够做的比我还好呦。', prompt_speech_16k, stream=False)):
    torchaudio.save('zero_shot_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)
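The note above asks for some basic sentence-splitting logic when the text comes from an LLM. One possible shape for that logic is sketched below. It is plain string handling, not CosyVoice code: split_for_tts, the punctuation sets and the max_chars limit are all illustrative assumptions and should be tuned for the actual text.

```python
from typing import Iterable, Iterator

# Punctuation that always ends a segment, and punctuation that ends one
# only when the buffer is already long. Both sets are assumptions.
SENTENCE_END = "。!?;!?;"
SOFT_BREAK = ",、,"


def split_for_tts(chunks: Iterable[str], max_chars: int = 40) -> Iterator[str]:
    """Regroup arbitrary text chunks (e.g. LLM tokens) into sentence-sized pieces."""
    buf = ""
    for chunk in chunks:
        for ch in chunk:
            buf += ch
            at_sentence_end = ch in SENTENCE_END
            at_soft_break = ch in SOFT_BREAK and len(buf) >= max_chars
            too_long = len(buf) >= 2 * max_chars  # hard cap when no punctuation appears
            if at_sentence_end or at_soft_break or too_long:
                yield buf
                buf = ""
    if buf.strip():
        yield buf  # flush whatever is left when the stream ends
```

A generator built this way could presumably be passed in place of text_generator() above, since the API is shown accepting a generator as its text input. The ASCII full stop is deliberately left out of SENTENCE_END so that decimals such as 0.5 are not split; English text would need it added, with some extra care.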

CosyVoice usage

cosyvoice = CosyVoice('pretrained_models/CosyVoice-300M-SFT', load_jit=False, load_trt=False, fp16=False)

# sft usage

print(cosyvoice.list_available_spks())

# change stream=True for chunk stream inference

for i, j in enumerate(cosyvoice.inference_sft('你好,我是通义生成式语音大模型,请问有什么可以帮您的吗?', '中文女', stream=False)):
    torchaudio.save('sft_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

cosyvoice = CosyVoice('pretrained_models/CosyVoice-300M') # or change to pretrained_models/CosyVoice-300M-25Hz for 25Hz inference

# zero_shot usage, <|zh|><|en|><|jp|><|yue|><|ko|> for Chinese/English/Japanese/Cantonese/Korean

prompt_speech_16k = load_wav('./asset/zero_shot_prompt.wav', 16000)

for i, j in enumerate(cosyvoice.inference_zero_shot('收到好友从远方寄来的生日礼物,那份意外的惊喜与深深的祝福让我心中充满了甜蜜的快乐,笑容如花儿般绽放。', '希望你以后能够做的比我还好呦。', prompt_speech_16k, stream=False)):
    torchaudio.save('zero_shot_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

# cross_lingual usage

prompt_speech_16k = load_wav('./asset/cross_lingual_prompt.wav', 16000)

for i, j in enumerate(cosyvoice.inference_cross_lingual('<|en|>And then later on, fully acquiring that company. So keeping management in line, interest in line with the asset that\'s coming into the family is a reason why sometimes we don\'t buy the whole thing.', prompt_speech_16k, stream=False)):
    torchaudio.save('cross_lingual_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

# vc usage

prompt_speech_16k = load_wav('./asset/zero_shot_prompt.wav', 16000)

source_speech_16k = load_wav('./asset/cross_lingual_prompt.wav', 16000)

for i, j in enumerate(cosyvoice.inference_vc(source_speech_16k, prompt_speech_16k, stream=False)):
    torchaudio.save('vc_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

cosyvoice = CosyVoice('pretrained_models/CosyVoice-300M-Instruct')

# instruct usage, support <laughter></laughter><strong></strong>[laughter][breath]

for i, j in enumerate(cosyvoice.inference_instruct('在面对挑战时,他展现了非凡的<strong>勇气</strong>与<strong>智慧</strong>。', '中文男', 'Theo \'Crimson\', is a fiery, passionate rebel leader. Fights with fervor for justice, but struggles with impulsiveness.', stream=False)):
    torchaudio.save('instruct_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)
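The CosyVoice-300M examples above mark the language of the input text with a tag such as <|en|>. A small helper can keep those tags in one place. This is a sketch only: with_language_tag is a hypothetical function, and the table simply restates the five tags listed in the zero_shot comment above. Whether a given model needs a tag in every mode is not something this sketch settles.

```python
# Tags copied from the zero_shot usage comment above.
LANGUAGE_TAGS = {
    "Chinese": "<|zh|>",
    "English": "<|en|>",
    "Japanese": "<|jp|>",
    "Cantonese": "<|yue|>",
    "Korean": "<|ko|>",
}


def with_language_tag(text, language):
    """Prefix text with its language tag unless the tag is already there."""
    try:
        tag = LANGUAGE_TAGS[language]
    except KeyError:
        raise ValueError(f"unsupported language: {language!r}") from None
    return text if text.startswith(tag) else tag + text
```

For example, with_language_tag('And then later on...', 'English') produces the same kind of string that is passed to inference_cross_lingual above.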

Start the web demo

You can use our web demo page to get familiar with CosyVoice quickly.

See the demo website for details.

# change iic/CosyVoice-300M-SFT for sft inference, or iic/CosyVoice-300M-Instruct for instruct inference

python3 webui.py --port 50000 --model_dir pretrained_models/CosyVoice-300M
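Since the model directory has to match the inference mode, the choice can be written down as a lookup table. The sketch below is illustrative only: webui_command is a hypothetical helper, and the local pretrained_models/ paths are an assumption based on the download step. The pairing of modes with models follows the usage code and the comment above.

```python
import shlex

# Which CosyVoice 1 model each mode uses, per the usage examples above.
# The 'pretrained_models/' prefix assumes the download layout shown earlier.
MODEL_FOR_MODE = {
    "sft": "pretrained_models/CosyVoice-300M-SFT",
    "zero_shot": "pretrained_models/CosyVoice-300M",
    "cross_lingual": "pretrained_models/CosyVoice-300M",
    "instruct": "pretrained_models/CosyVoice-300M-Instruct",
}


def webui_command(mode, port=50000):
    """Build the argument list that launches webui.py for the given mode."""
    model_dir = MODEL_FOR_MODE[mode]
    return ["python3", "webui.py", "--port", str(port), "--model_dir", model_dir]


print(shlex.join(webui_command("sft")))
```

The returned list can be handed directly to subprocess.run, which avoids quoting problems if a path ever contains spaces.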

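Advanced usage

For advanced users, we provide training and inference scripts in examples/libritts/cosyvoice/run.sh.

Build for deployment

Optionally, if you want to deploy CosyVoice as a service, run the following steps.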

cd runtime/python

docker build -t cosyvoice:v1.0 .

# change iic/CosyVoice-300M to iic/CosyVoice-300M-Instruct if you want to use instruct inference

# for grpc usage

docker run -d --runtime=nvidia -p 50000:50000 cosyvoice:v1.0 /bin/bash -c "cd /opt/CosyVoice/CosyVoice/runtime/python/grpc && python3 server.py --port 50000 --max_conc 4 --model_dir iic/CosyVoice-300M && sleep infinity"

cd grpc && python3 client.py --port 50000 --mode <sft|zero_shot|cross_lingual|instruct>

# for fastapi usage

docker run -d --runtime=nvidia -p 50000:50000 cosyvoice:v1.0 /bin/bash -c "cd /opt/CosyVoice/CosyVoice/runtime/python/fastapi && python3 server.py --port 50000 --model_dir iic/CosyVoice-300M && sleep infinity"

cd fastapi && python3 client.py --port 50000 --mode <sft|zero_shot|cross_lingual|instruct>

1. Clone the project

Make sure Git is installed on your machine (see Git - Downloads).

git clone --recursive https://github.com/FunAudioLLM/CosyVoice.git

cd CosyVoice

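git submodule update --init --recursive

2. Create a virtual environment

I tried installing pynini directly on Windows, but the build failed because a key OpenFST dependency, available only on Linux, was missing. So it has to be installed through Conda.

Install Conda / Miniconda: see Download Now | Anaconda

After installation, find Anaconda Prompt in the Windows Start menu and open it. The prompt should look like this: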

(base) C:\Users\Administrator>

conda create -n cosyvoice python=3.10

conda activate cosyvoice

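conda install -y -c conda-forge pynini==2.1.5

Note: after running conda activate cosyvoice, make sure the prompt prefix has changed from (base) to (cosyvoice) before running the next install step. Otherwise the packages will be installed outside the cosyvoice environment.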
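The check on the prompt prefix can also be done from Python, which is handy at the top of a setup script. This is a small sketch with hypothetical helper names. It relies on the CONDA_DEFAULT_ENV environment variable, which conda sets when an environment is activated.

```python
import os


def active_conda_env(environ=os.environ):
    """Return the name of the active conda environment, or None."""
    return environ.get("CONDA_DEFAULT_ENV")


def require_env(expected="cosyvoice", environ=os.environ):
    """Raise if the active conda environment is not the expected one."""
    actual = active_conda_env(environ)
    if actual != expected:
        raise RuntimeError(
            f"expected conda env {expected!r}, but the active env is {actual!r}"
        )
    return actual
```

Calling require_env() before any pip install step stops the script early when it is started from (base) by mistake.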

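3. Install the required libraries

Just install them directly. If a library turns out to be missing (Cython, for example), install it manually.

pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/ --trusted-host=mirrors.aliyun.com

4. Download the model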

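The instructions on the official model page ("CosyVoice语音生成大模型2.0-0.5B · 模型库") left me confused; see the screenshot below:

Since we only want to deploy CosyVoice2-0.5B, downloading the first model is enough. If you need the other versions, download them yourself. (I copy-pasted the whole list, and it had been downloading for nearly an hour; the Aliyun mirror even throttled me.)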

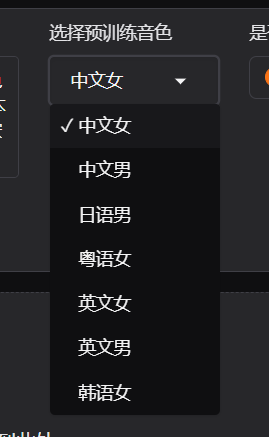

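Note: the WebUI supports four inference modes: pretrained voice, 3s fast cloning, cross-lingual cloning, and natural-language control. With CosyVoice 1, these features were split across four models. Now the single CosyVoice2-0.5B model covers all four!

Natural-language control is limited in the WebUI. For the full feature, use code-based inference or wait for a later official update. Alternatively, see the workaround in a newer post, "CosyVoice2实现音色保存及推理" (saving a voice and running inference with CosyVoice2), on Ping通途说.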

from modelscope import snapshot_download

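snapshot_download('iic/CosyVoice2-0.5B', local_dir='pretrained_models/CosyVoice2-0.5B')

5. Testing

The official documentation gives the usage for CosyVoice 1.0; here we start by launching the WebUI.

Open the source of webui.py and you can see that the model it loads by default is already CosyVoice2. Just run python webui.py.

Possible problems

If startup fails with pydoc.ErrorDuringImport: problem in cosyvoice.flow.flow_matching - ModuleNotFoundError: No module named 'matcha', check whether CosyVoice-main\third_party\Matcha-TTS contains any files. In the official Git project this directory is an external link (a Git submodule), so it can be empty. If it is, download the archive yourself and unpack it there: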

Matcha-TTS/configs at dd9105b34bf2be2230f4aa1e4769fb586a3c824e · shivammehta25/Matcha-TTS · GitHub
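The same problem can be caught before any model is loaded. The sketch below checks the directory and adds it to sys.path, as the usage code does with sys.path.append. add_matcha_to_path is a hypothetical helper, and it assumes that the Matcha-TTS checkout contains a top-level matcha package, which is what the error message about the module named 'matcha' suggests.

```python
import sys
from pathlib import Path


def add_matcha_to_path(repo_root="."):
    """Put third_party/Matcha-TTS on sys.path, failing early if it is empty."""
    matcha_dir = Path(repo_root) / "third_party" / "Matcha-TTS"
    # Assumption: a usable checkout contains a 'matcha' package directory.
    if not (matcha_dir / "matcha").is_dir():
        raise FileNotFoundError(
            f"{matcha_dir} has no 'matcha' package; run "
            "'git submodule update --init --recursive' or unpack Matcha-TTS there"
        )
    if str(matcha_dir) not in sys.path:
        sys.path.append(str(matcha_dir))
    return matcha_dir
```

Calling this at the top of a script, before importing from cosyvoice, replaces the long import traceback with a single message that says what to fix.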

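Run webui.py again and the WebUI should load successfully.

If the pretrained voice list is empty:

CosyVoice2-0.5B has no spk2info.pt · Issue #729 · FunAudioLLM/CosyVoice

Following the issue above, download spk2info.pt manually and copy it into pretrained_models/CosyVoice2-0.5B, then rerun webui.py. The pretrained voices should now appear:

I ran each test using the voice of 满穗 from 《饿殍·明末千里行》 as the reference. The results are as follows:

Original audio: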

我知道,那件事之后,良爷可能觉得有些事都是老天定的,人怎么做都没用,但我觉得不是这样的。

3s fast cloning:

CosyVoice 2.0 已发布!与 1.0 版相比,新版本提供了更准确、更稳定、更快和更好的语音生成能力。

Emotional speech generation:

在他讲述那个荒诞故事的过程中,他突然[laughter]停下来,因为他自己也被逗笑了[laughter]。

Dialect control:

用四川话说这句话 | 收到好友从远方寄来的生日礼物,那份意外的惊喜与深深的祝福让我心中充满了甜蜜的快乐,笑容如花儿般绽放。

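6. Minimal deployment

Getting the environment running is nice, but you can't go through the WebUI by hand every time you need audio converted. Having walked through the whole deployment, we now know which pieces don't actually need to be installed.

Following the official usage examples, audio can be generated directly with the code below.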

import sys

sys.path.append('third_party/Matcha-TTS')

from cosyvoice.cli.cosyvoice import CosyVoice, CosyVoice2

from cosyvoice.utils.file_utils import load_wav

import torchaudio

cosyvoice = CosyVoice2('pretrained_models/CosyVoice2-0.5B', load_jit=False, load_trt=False, fp16=False)

# 3s cloning

prompt_speech_16k = load_wav('zero_shot_prompt.wav', 16000)

for i, j in enumerate(cosyvoice.inference_zero_shot('收到好友从远方寄来的生日礼物,那份意外的惊喜与深深的祝福让我心中充满了甜蜜的快乐,笑容如花儿般绽放。', '希望你以后能够做的比我还好呦。', prompt_speech_16k, stream=False)):
    torchaudio.save('zero_shot_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

# fine-grained control, i.e. emotional generation

for i, j in enumerate(cosyvoice.inference_cross_lingual('在他讲述那个荒诞故事的过程中,他突然[laughter]停下来,因为他自己也被逗笑了[laughter]。', prompt_speech_16k, stream=False)):
    torchaudio.save('fine_grained_control_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

# instruct generation

for i, j in enumerate(cosyvoice.inference_instruct2('收到好友从远方寄来的生日礼物,那份意外的惊喜与深深的祝福让我心中充满了甜蜜的快乐,笑容如花儿般绽放。', '用四川话说这句话', prompt_speech_16k, stream=False)):
    torchaudio.save('instruct_{}.wav'.format(i), j['tts_speech'], cosyvoice.sample_rate)

So for a minimal CosyVoice2-0.5B deployment, we only need the following:

- main.py: your own code

- third_party/Matcha-TTS: a key module that CosyVoice2 depends on

- requirements.txt: the libraries the project needs

- pretrained_models/CosyVoice2-0.5B: the CosyVoice2 model

- Pynini: required by WeTextProcessing for text normalization. Install it with conda install -y -c conda-forge pynini==2.1.5

The requirements.txt is attached below, for reference only.

--extra-index-url https://download.pytorch.org/whl/cu121

--extra-index-url https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/onnxruntime-cuda-12/pypi/simple/ # https://github.com/microsoft/onnxruntime/issues/21684

conformer==0.3.2

diffusers==0.29.0

gdown==5.1.0

gradio==5.4.0

grpcio==1.57.0

grpcio-tools==1.57.0

hydra-core==1.3.2

HyperPyYAML==1.2.2

inflect==7.3.1

librosa==0.10.2

lightning==2.2.4

matplotlib==3.7.5

modelscope==1.15.0

networkx==3.1

omegaconf==2.3.0

onnx==1.16.0

onnxruntime==1.18.0

openai-whisper==20231117

protobuf==4.25

pydantic==2.7.0

pyworld==0.3.4

rich==13.7.1

soundfile==0.12.1

torch==2.3.1

torchaudio==2.3.1

transformers==4.40.1

WeTextProcessing==1.0.3
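To compare an existing environment against this list, the pinned versions can be read and checked with the standard library. The following is a rough sketch with hypothetical helper names. It compares version strings literally after dropping a local suffix such as +cu121, so a pin like protobuf==4.25 would be reported as differing from an installed 4.25.0. A proper comparison would need a version-aware library.

```python
from importlib import metadata


def parse_pins(lines):
    """Yield (name, version) for every 'name==version' line."""
    for raw in lines:
        line = raw.split(" #", 1)[0].strip()  # drop trailing comments
        if not line or line.startswith(("#", "-")):
            continue  # skip blanks, comment lines and pip options
        name, sep, version = line.partition("==")
        if sep:
            yield name.strip(), version.strip()


def check_pins(pins, installed_version=metadata.version):
    """Return {name: (wanted, found)} for packages that are missing or differ."""
    problems = {}
    for name, wanted in pins:
        try:
            # '2.3.1+cu121' is treated as '2.3.1'.
            found = installed_version(name).split("+", 1)[0]
        except metadata.PackageNotFoundError:
            found = None
        if found != wanted:
            problems[name] = (wanted, found)
    return problems
```

A typical call would be check_pins(parse_pins(open('requirements.txt', encoding='utf-8'))), which returns an empty dict when every pinned package matches.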